For years, the First Line of Defense (1LoD), the business units and front-line staff who own and manage risk, has operated under conflicting demands. Despite rising investment in compliance technology, operational costs keep climbing, while detection rates for sophisticated financial crime have barely moved. Banks trace only about 2% of global financial crime flows, yet they still dedicate as much as 15% of their full-time staff to KYC/AML work.

Traditional methods built on rule-based automation and large headcounts have reached their limits. The classic “Three Lines of Defense” model is struggling to contain the asymmetric risks that hyper-digitalized markets and algorithmic scams present.

Yet a major transformation is underway. The industry is moving past the Generative AI hype and toward the application of Agentic AI: systems dedicated to autonomous reasoning, planning, and execution.

This shift is more than a software upgrade; it is a transition from a static, reactive control environment to a proactive, real-time digital workforce.

From Generative Copilots to Agentic Action

To understand the strategic impact on the 1LoD, we first need to distinguish the tools in use today from the agentic systems now entering the market.

In essence, Generative AI (GenAI) is reactive. It waits for a prompt before it summarizes a regulatory filing or drafts a credit memo. While it is useful for analyst productivity, it does not possess “agency”: the ability to make decisions and act across systems independently to accomplish complex goals.

In contrast, Agentic AI is goal-directed. It can manage multi-step workflows with minimal human involvement. Where a GenAI model might draft an email about a suspicious transaction, an agentic system can detect the discrepancy, freeze the account, start a verification procedure, compare the customer’s response with their historical behavior, and update the core banking system, all without human intervention.

This shifts the 1LoD from a bottleneck of manual “hand-offs” to a “digital factory” where specialized agents pass context between departments instantly.

Transforming KYC – Transitioning to Always-on Compliance

The primary high-value use case for Agentic AI is Know Your Customer (KYC) and onboarding. Traditional onboarding is often a linear, friction-heavy process prone to delays. Productivity gains from deploying “squads” of specialized agents in these areas reportedly range from 200% to 2,000%.

In a fully matured agentic workflow:

- Onboarding Agents harness computer vision to extract metadata from identity documents.

- Compliance Agents cross-verify this data against dynamic global sanctions lists and Politically Exposed Persons (PEP) databases within seconds.

- Risk Agents assess the likelihood of fraud based on device fingerprinting and behavioral history.

Combining these tasks cuts onboarding time from days to minutes. More significantly, it enables the shift from periodic reviews (1-, 3-, or 5-year cycles) to Perpetual KYC (pKYC). Where periodic reviews leave data gaps between cycles, agentic systems monitor transaction flows and external data sources continuously. The agent refreshes the customer due diligence profile on its own initiative whenever a “trigger event” occurs, such as a change in beneficial ownership or a new sanctions hit.
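The trigger-event idea can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the event names, the `CustomerProfile` fields, and the rule that a sanctions hit escalates the rating are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

# Hypothetical trigger types; a real pKYC program would define its own taxonomy.
TRIGGER_EVENTS = {"beneficial_ownership_change", "sanctions_hit"}


@dataclass
class CustomerProfile:
    customer_id: str
    risk_rating: str = "low"
    last_reviewed: Optional[datetime] = None
    review_log: List[str] = field(default_factory=list)


def handle_event(profile: CustomerProfile, event_type: str, now: datetime) -> bool:
    """Refresh the due diligence profile on a trigger event; return True if refreshed."""
    if event_type not in TRIGGER_EVENTS:
        return False  # routine activity: no refresh needed
    # Illustrative rule only: a sanctions hit escalates the risk rating.
    if event_type == "sanctions_hit":
        profile.risk_rating = "high"
    profile.last_reviewed = now
    profile.review_log.append(f"{now.isoformat()} refresh triggered by {event_type}")
    return True


profile = CustomerProfile("C-001")
now = datetime(2025, 1, 15, tzinfo=timezone.utc)
for event in ["card_payment", "beneficial_ownership_change", "sanctions_hit"]:
    handle_event(profile, event, now)
```

In this sketch the routine card payment is ignored, while the two trigger events each produce a logged refresh. The point is that the review is driven by events rather than by a calendar.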

Resolving the False Positive Dilemma in Financial Crime

In fraud detection and anti-money laundering, the 1LoD faces a staggering volume of false positives, with rates exceeding 90% in legacy rule-based systems. Investigators spend much of their time clearing legitimate transactions instead of investigating real threats and focusing on strategic tasks.

Agentic AI fundamentally changes this picture by processing transactions “in context” instead of in isolation. By combining information from payment records, vendor databases, and chat patterns, fraud agents can reduce false positives by up to 80%.

Unlike static rules, these agents use adaptive learning. Each confirmed fraud or cleared alert feeds back into the model, refining accuracy in real time. For instance, major players such as Standard Chartered have reported a 40% drop in compliance breaches and faster case resolution from such predictive, AI-driven verification.
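To put the false-positive figures in perspective, here is a small worked calculation. The workload of 1,000 alerts is an assumption chosen for round numbers, and the example takes the best case of an 80% reduction in false positives with no genuine alert lost, which a real deployment may not achieve.

```python
def alert_precision(true_alerts: int, false_alerts: int) -> float:
    """Share of alerts that point at real financial crime."""
    return true_alerts / (true_alerts + false_alerts)


# Assumed workload: 1,000 alerts, 90% of them false positives.
true_alerts, false_alerts = 100, 900
before = alert_precision(true_alerts, false_alerts)

# Assumed best case: 80% fewer false positives, no true alert lost.
remaining_false = false_alerts - false_alerts * 80 // 100
after = alert_precision(true_alerts, remaining_false)

print(f"alerts to review: {true_alerts + false_alerts} -> {true_alerts + remaining_false}")
print(f"precision: {before:.1%} -> {after:.1%}")
```

Under these assumptions, investigators review 280 alerts instead of 1,000, and the share of alerts that are genuine rises from 10.0% to about 35.7%.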

The Skeleton of Autonomy – Event-Driven Architecture

For Chief Risk Officers and Chief Technology Officers, it is vital to understand that Agentic AI requires a sophisticated infrastructure. Autonomous agents must be fed by continuously generated data. Traditional batch-cycle processing cannot support real-time risk management.

To support agentic workflows, banks are adopting Event-Driven Architectures (EDA) built on technologies such as Apache Kafka and Apache Flink. Such an architecture acts as the central nervous system, carrying events such as transaction logs, login signals, and market moves to agents in real time.

As we progress toward an Agent Mesh, the communication model becomes “intent streams.” Here, the agent does not just pass on data; it also conveys an objective. An agent tracking a suspicious login can autonomously propose an intent to contact the client, with the trade-off between cost and security resolved automatically. This makes the entire process chain resilient and self-healing.
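The difference between passing data and passing an intent can be shown with a toy, in-process event bus. This is a sketch only: `EventBus` is a stand-in written for this example and does not use the Kafka or Flink APIs, and the topic names, payload fields, and "unknown device" rule are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, List


@dataclass
class Event:
    topic: str      # e.g. "login" or "intent"; topic names are made up for this sketch
    payload: dict


class EventBus:
    """Toy in-process stand-in for a streaming platform."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Event], None]]] = defaultdict(list)
        self.history: List[Event] = []

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, event: Event) -> None:
        self.history.append(event)
        for handler in self._subscribers[event.topic]:
            handler(event)


bus = EventBus()
contacted: List[str] = []


def login_agent(event: Event) -> None:
    # Hypothetical rule: a login from an unrecognized device is suspicious.
    if not event.payload["known_device"]:
        # Publish an intent (an objective), not just the raw login data.
        bus.publish(Event("intent", {"goal": "verify_customer",
                                     "customer_id": event.payload["customer_id"]}))


def contact_agent(event: Event) -> None:
    # Any agent able to fulfil the goal can pick the intent up.
    if event.payload["goal"] == "verify_customer":
        contacted.append(event.payload["customer_id"])


bus.subscribe("login", login_agent)
bus.subscribe("intent", contact_agent)

bus.publish(Event("login", {"customer_id": "C-001", "known_device": True}))
bus.publish(Event("login", {"customer_id": "C-002", "known_device": False}))
```

Only the second login produces an intent, and the login agent never needs to know which agent will contact the customer. That decoupling is what allows another agent to take over if one fails.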

Governance – The “Glass Box” Requirement

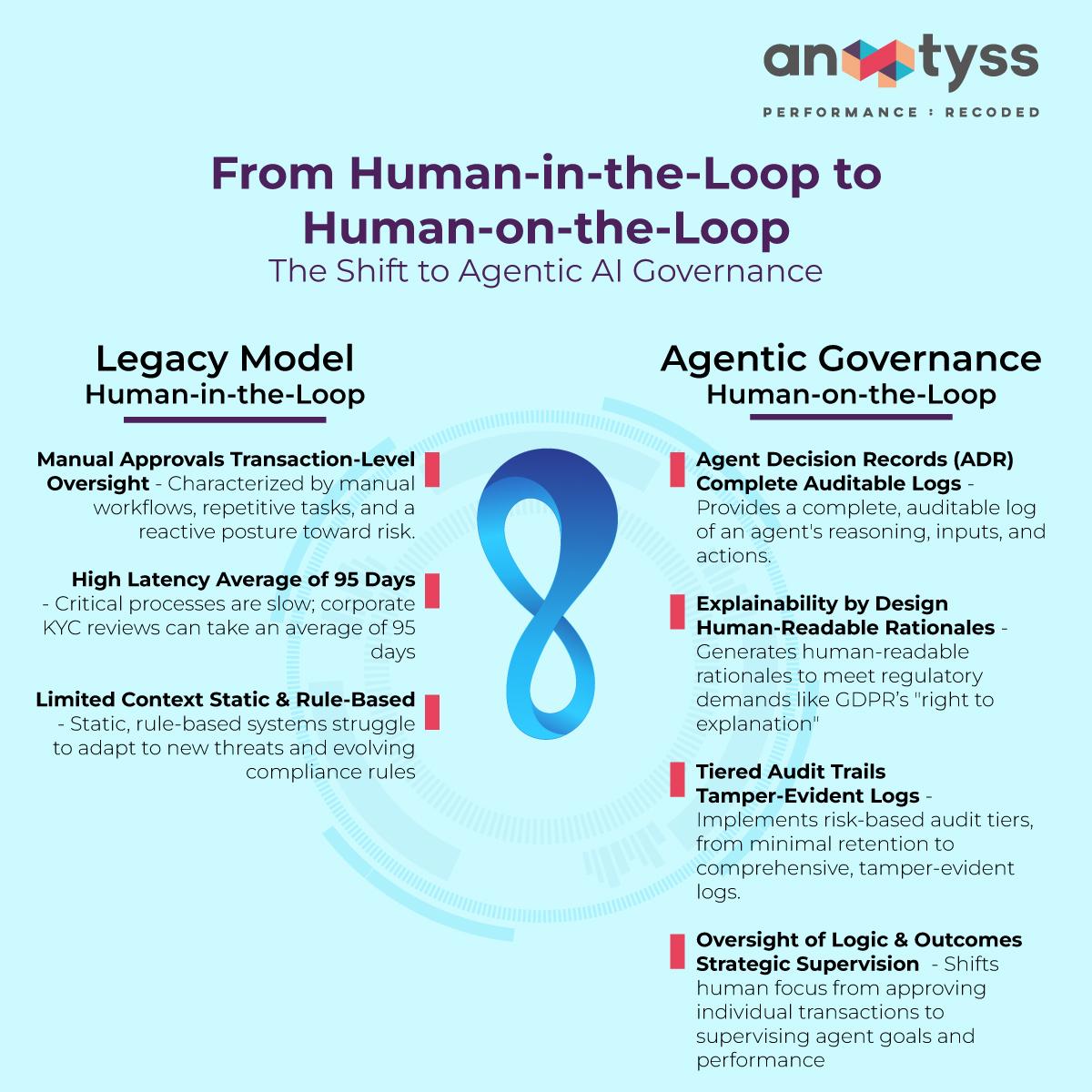

The growing autonomy of Agentic AI naturally raises concerns about governance and “black box” decisions. Regulators require complete transparency. Under legal frameworks such as GDPR and the EU AI Act, “it was the algorithm that decided” is not a valid excuse.

To deploy Agentic AI safely in the 1LoD, institutions must implement Agent Decision Records (ADR). These provide an immutable, comprehensive log of the reasoning behind an agent’s action—documenting which data sources were consulted, which business rules were applied, and the confidence score of the decision.

This shifts the governance model from “Human-in-the-Loop” for every transaction to “Human-on-the-Loop” for oversight of the system’s logic and outcomes. By implementing tiered audit trails that capture context and reasoning, banks can satisfy regulatory requirements for explainability while reaping the efficiency benefits of autonomy.
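As a rough illustration of what an Agent Decision Record could look like, the sketch below stores the data sources, rules, and confidence score for each decision and links records with SHA-256 hashes so that later edits become detectable. The hash chain is our assumption about one way to approximate immutability, and the class names, field names, and sample values are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace
from typing import List, Tuple


@dataclass(frozen=True)
class AgentDecisionRecord:
    agent: str
    action: str
    data_sources: Tuple[str, ...]
    rules_applied: Tuple[str, ...]
    confidence: float
    prev_hash: str  # digest of the previous record, linking the log into a chain

    def digest(self) -> str:
        body = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(body).hexdigest()


class DecisionLog:
    GENESIS = "0" * 64

    def __init__(self) -> None:
        self._records: List[AgentDecisionRecord] = []
        self._head = self.GENESIS  # digest of the most recent record

    def append(self, agent, action, data_sources, rules_applied, confidence):
        record = AgentDecisionRecord(agent, action, tuple(data_sources),
                                     tuple(rules_applied), confidence, self._head)
        self._records.append(record)
        self._head = record.digest()
        return record

    def verify(self) -> bool:
        """Recompute the chain and check every link, including the head."""
        expected = self.GENESIS
        for record in self._records:
            if record.prev_hash != expected:
                return False
            expected = record.digest()
        return expected == self._head


log = DecisionLog()
log.append("risk-agent", "freeze_account",
           ["core_banking", "device_fingerprint"], ["RULE-17"], 0.93)
log.append("compliance-agent", "refresh_cdd",
           ["sanctions_list"], ["RULE-02"], 0.88)
intact = log.verify()

# Simulate someone rewriting the first record after the fact.
log._records[0] = replace(log._records[0], confidence=0.10)
tamper_detected = not log.verify()
```

A chain like this only makes tampering evident; it does not prevent it. In practice the head digest would need to be anchored somewhere the agents themselves cannot rewrite.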

Conclusion

The integration of Agentic AI into banking operations is not an optional upgrade; it is a strategic necessity for survival in an asymmetric risk landscape. Institutions that successfully deploy these digital workforces will not only slash operational costs but will create a robust, 24/7 First Line of Defense that predicts and prevents risks before they materialize.

The question is no longer if AI will reshape banking, but how leaders will architect their organizations to harness it.

Navigating the transition from legacy operations to an agentic-first enterprise requires deep domain expertise and a clear roadmap. At Anaptyss, we help financial institutions design and deploy solutions that integrate Generative and Agentic AI directly into the core of their business operations.

To dive deeper into the architectural and operational steps required for this transformation, we invite you to read our comprehensive white paper – Reimagining Banking Functions: How Generative and Agentic AI are Shaping the Future.